PhD student @ HKU CDS

Undergrad student @ HUST EIC

Hi! I am a first-year PhD student at The University of Hong Kong (HKU), School of Computing and Data Science, supervised by Prof. Hengshuang Zhao. Previously, I received my B.Eng. from the School of Electronic Information and Communications at Huazhong University of Science and Technology (HUST) with a GPA of 3.98/4.0 (Rank: 1/155). During my undergraduate studies, I was fortunate to be supervised by Prof. Xiang Bai. My current research interests lie primarily in Multimodal Large Language Models and Reinforcement Learning.

Education

- The University of Hong Kong, School of Computing and Data Science. Ph.D. Student, Sept. 2025 - Present

- Huazhong University of Science and Technology. B.S. in Electronic Information and Communications, Sept. 2021 - Jul. 2025

Experience

- TikTok. Research Intern, Nov. 2024 - Present

Honors & Awards

- National Scholarship, 2024

- National Scholarship, 2023

- National Scholarship, 2022

- Outstanding Undergraduate, 2023

- Meritorious Winner in Mathematical Contest in Modeling (MCM), 2023

News

Selected Publications

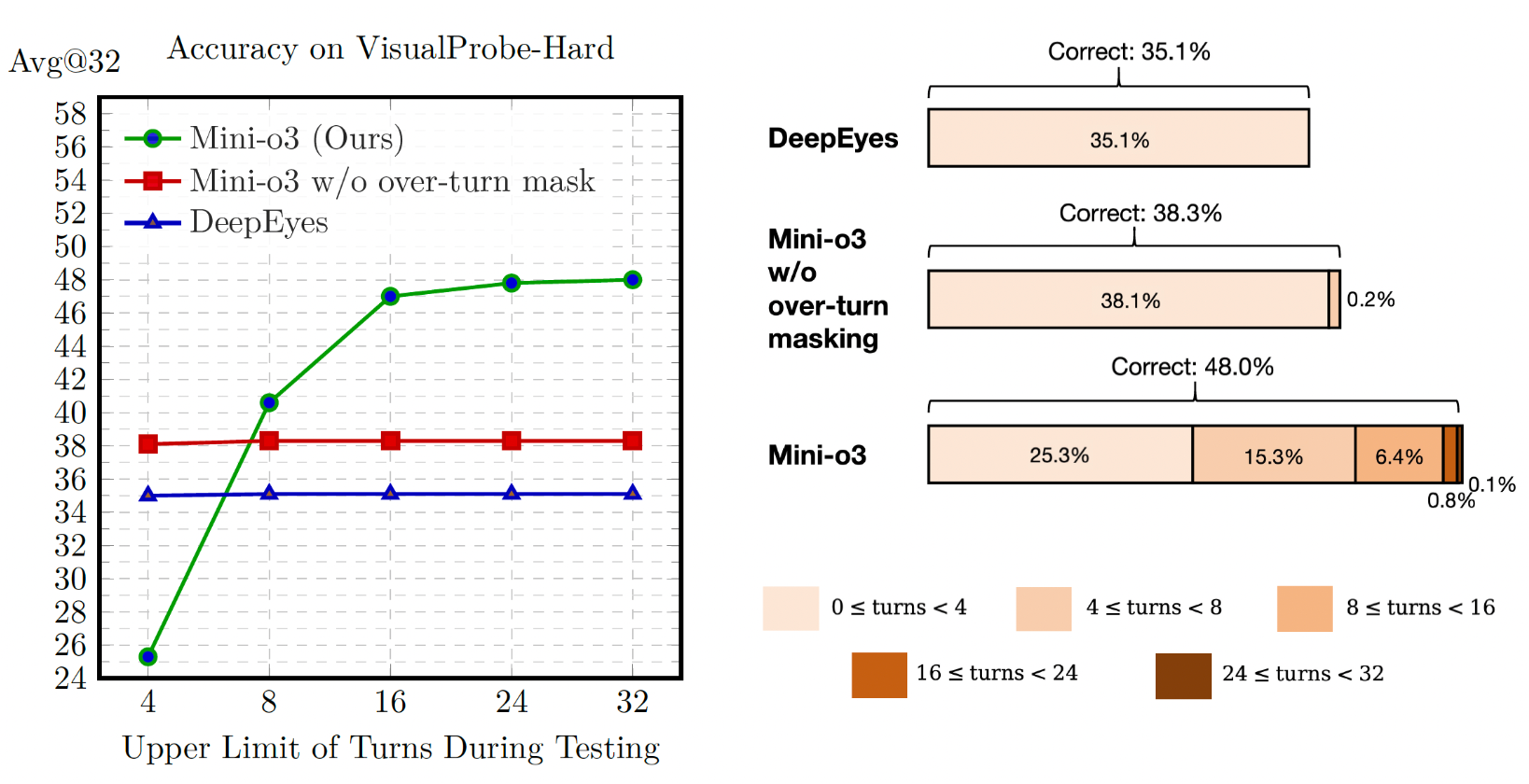

Mini-o3: Scaling Up Reasoning Patterns and Interaction Turns for Visual Search

Xin Lai*, Junyi Li*, Wei Li, Tao Liu, Tianjian Li, Hengshuang Zhao# (* equal contribution, # corresponding author)

Under review. 2025

A full training recipe to reproduce OpenAI o3-style thinking-with-images capability.

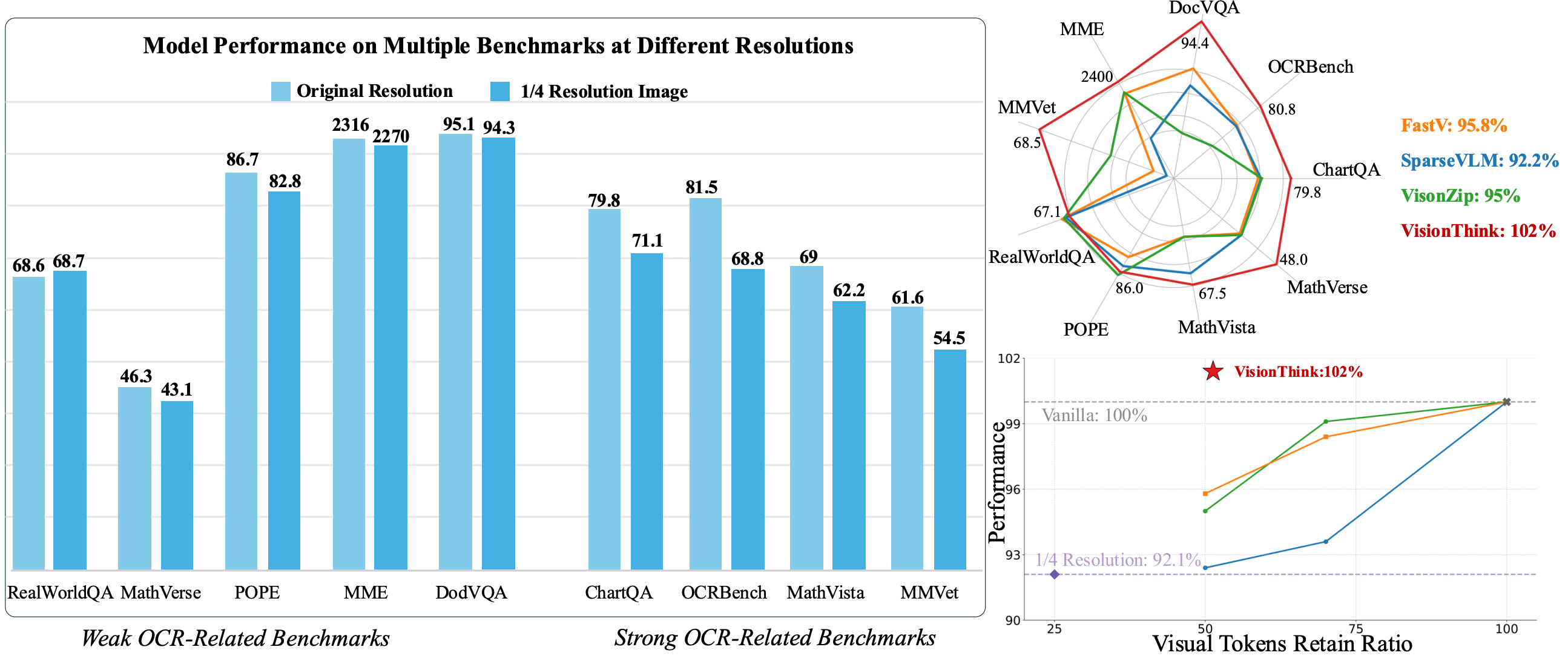

VisionThink: Smart and Efficient Vision Language Model via Reinforcement Learning

Senqiao Yang*, Junyi Li*, Xin Lai*, Bei Yu, Hengshuang Zhao, Jiaya Jia (* equal contribution)

Advances in Neural Information Processing Systems (NeurIPS) 2025 Poster

A new paradigm of efficient VLMs with token compression.

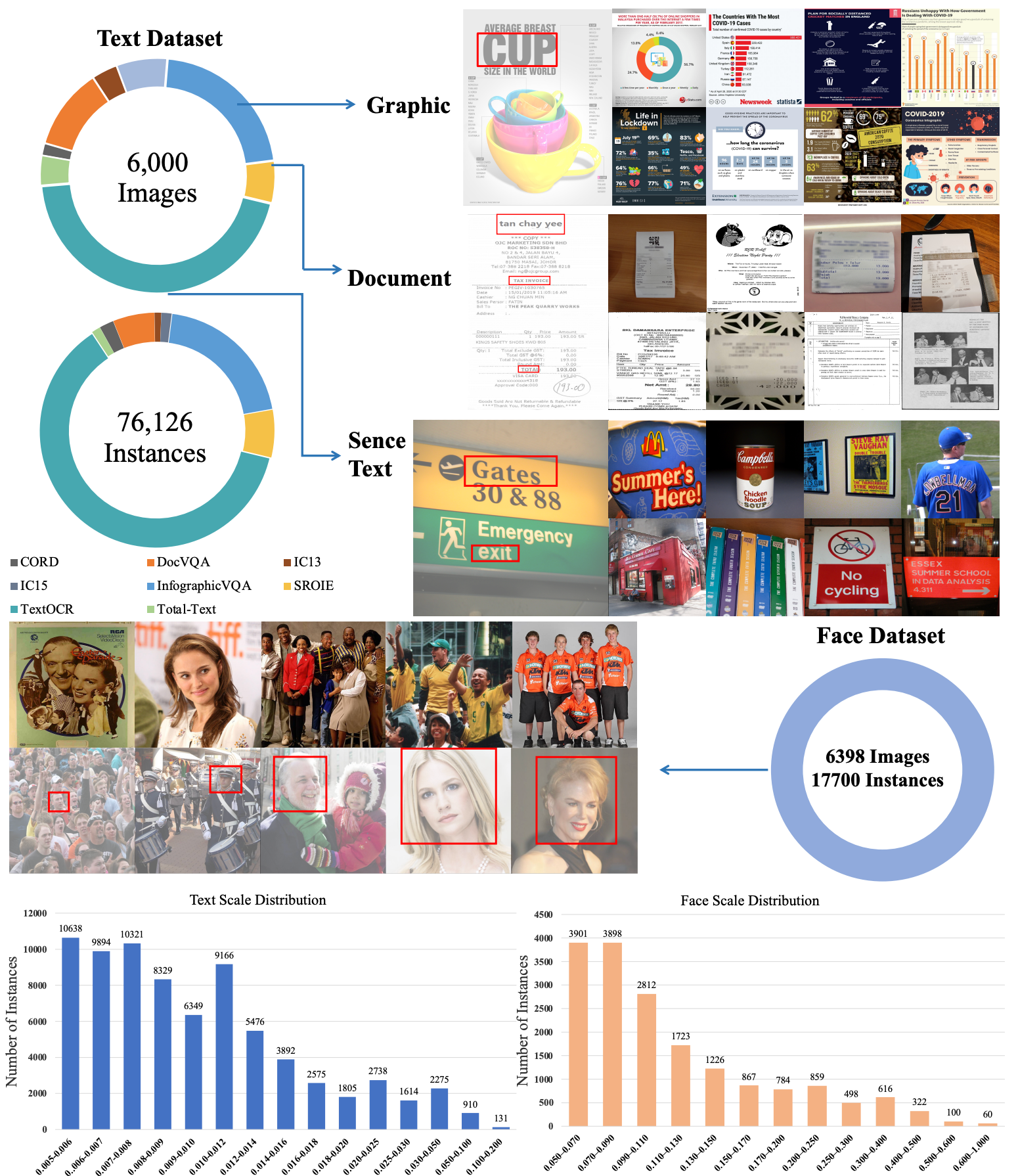

TokBench: Evaluating Your Visual Tokenizer before Visual Generation

Junfeng Wu*, Dongliang Luo*, Weizhi Zhao, Zhihao Xie, Yuanhao Wang, Junyi Li, Xudong Xie, Yuliang Liu, Xiang Bai# (* equal contribution, # corresponding author)

Under review. 2025

A simple benchmark for evaluating your visual tokenizer.

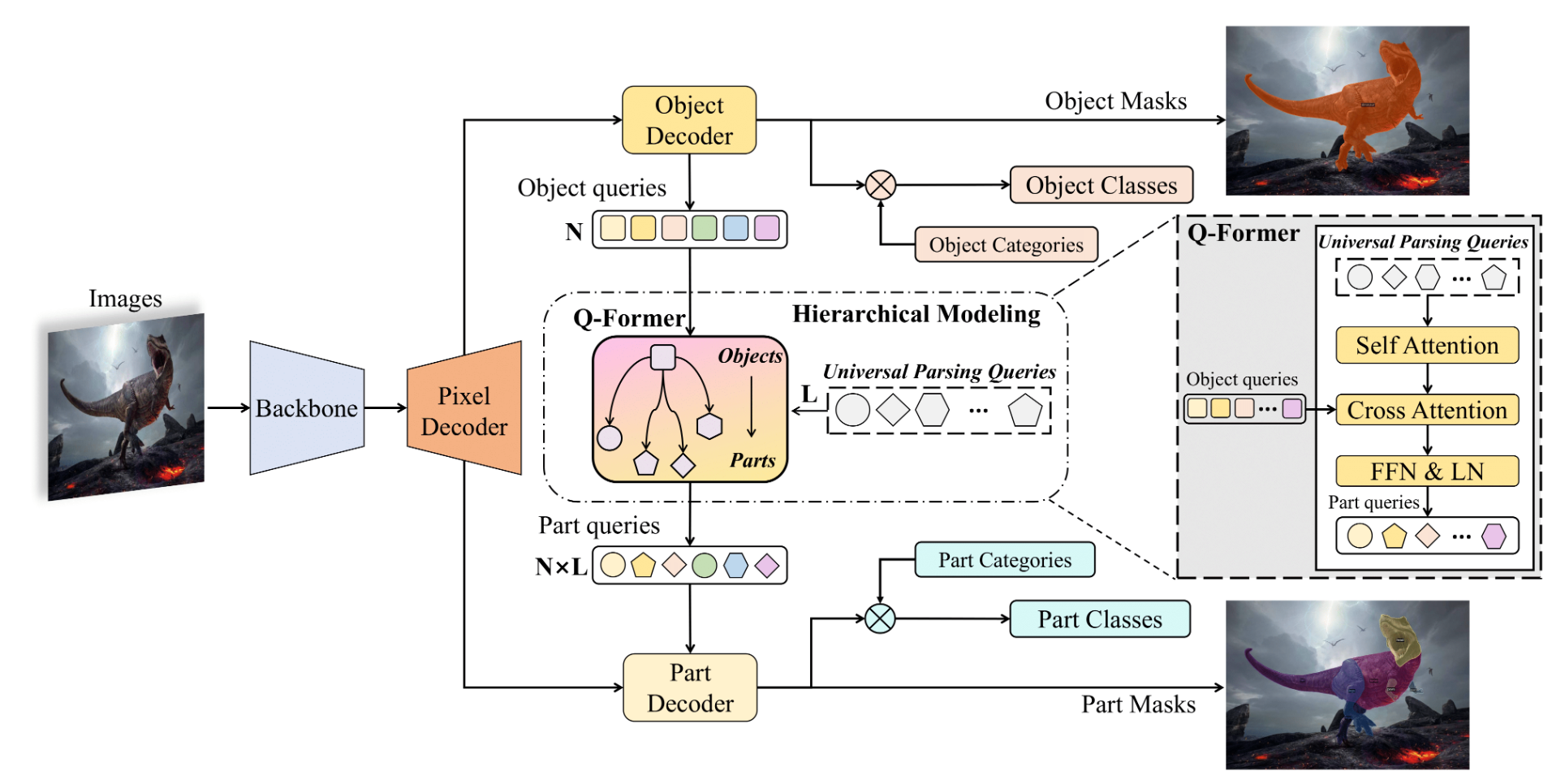

PartGLEE: A Foundation Model for Recognizing and Parsing Any Objects

Junyi Li*, Junfeng Wu*, Weizhi Zhao, Song Bai, Xiang Bai# (* equal contribution, # corresponding author)

European Conference on Computer Vision (ECCV) 2024 Poster

The first part-level foundation model for locating and identifying both objects and parts in images through a hierarchical framework.
